Teaching old codes new tricks

Increasing peak performance for weather modeling using GPUs

Apr 16, 2019 - by Laura Snider


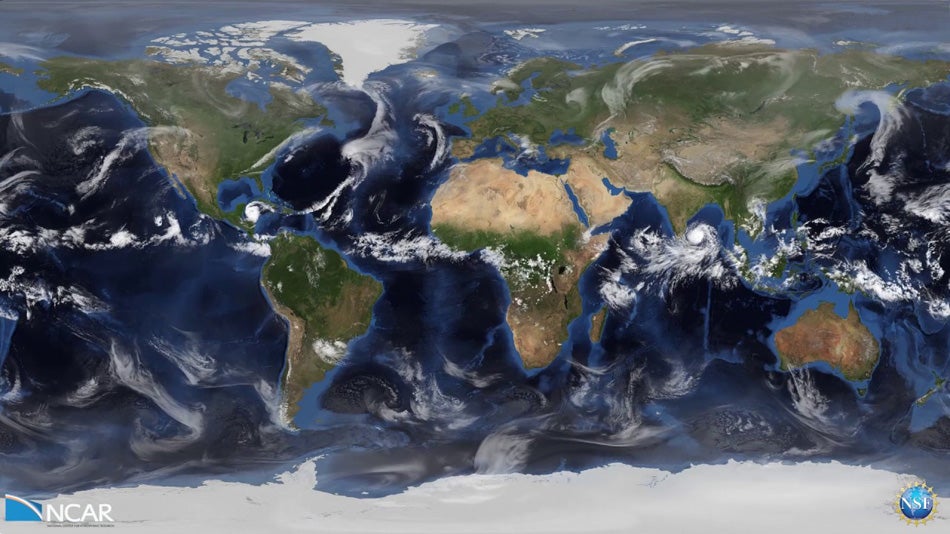

This image is from a high-resolution simulation run with the Model for Prediction Across Scales (MPAS). NCAR is working with The Weather Company to enable MPAS to run on graphics processing units (GPUs), with the goal of using fewer supercomputing resources. Image: UCAR.

Can a single weather model be run globally at a resolution high enough to start resolving individual thunderstorms — whether in the American Midwest, African rainforests, or anywhere else?

The answer depends on the horsepower of your supercomputer. Running extremely complicated models over the entire Earth at such high resolution is a herculean task for any machine, which has made a predictive global, storm-scale model impractical.

But now, the National Center for Atmospheric Research has partnered with The Weather Company, an IBM subsidiary, to experiment with a technology that could make global, storm-scale forecasting a practical reality: graphics processing units, or GPUs.

GPUs were originally used to render 3D video games, but more recently they have been applied to a variety of interesting problems that require large amounts of computational resources to solve, including atmospheric modeling.

"GPUs are a very promising technology, and we want to see how big a role they might be able to play in making modeling more efficient," said Rich Loft, a scientist at NCAR's Computational and Information Systems Laboratory (CISL). "I’m not dead certain that we’re going to succeed, but that’s why we call it research. We're doing something that not many people have had success with."

The payoff has the potential to be huge. IBM hopes the new approach could provide a nearly 200% improvement in the company's forecasting resolution for much of the globe. It also has the potential to provide forecasts for less technologically advanced countries that match the standards of more developed nations.

Complex models of weather, climate, and the larger Earth system have traditionally been run on supercomputers, such as the NCAR-operated Cheyenne system, that use central processing units, or CPUs. These processors are composed of a dozen or so powerful processing elements, or cores, capable of solving some of the most challenging mathematical equations within the models at a blistering pace. GPUs, on the other hand, have a different design.

"The idea is that even though the processing elements in a GPU can do fewer mathematical calculations per second individually, you could have a hundred times more of them than in a CPU," Loft said. "By working together, they might be able to achieve a higher sustained performance."

So a weather model might be able to run more quickly and more efficiently on a supercomputing system equipped with GPUs. But there's a catch: For this all to work, you have to find a way to spread the math represented in a model's code across 100 times more processing units. In other words, you need to identify either 100 times as much data to process or 100 times as many equations that can be solved independently.

Loft is optimistic. Meteorological models, by their nature, involve solving equations to determine the simultaneous conditions at thousands — or millions — of points on a grid overlaying the forecast area. Atmospheric science, he says, may provide the ideal test case for the use of the emerging technology in scientific modeling.
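To make the idea of "many independent grid points" concrete, here is a minimal Python sketch. It is not MPAS code: the periodic 2D grid, the five-point averaging rule, and the function names are illustrative assumptions. Each point's new value reads only the *previous* values of itself and its four neighbors, so no update depends on another point's new value. That independence is what would allow the work to be spread across the many cores of a GPU. The two functions compute the same result, one point at a time and all points at once.

```python
import numpy as np


def smooth_loop(field: np.ndarray) -> np.ndarray:
    """Update one grid point at a time, as a serial CPU loop would."""
    ny, nx = field.shape
    out = np.empty_like(field)
    for j in range(ny):
        for i in range(nx):
            # Every new value reads only old values from `field`, so the
            # (j, i) iterations could run in any order, or all at once.
            # The modulo wraps indices around the edges (periodic grid).
            out[j, i] = (
                field[j, i]
                + field[(j - 1) % ny, i]
                + field[(j + 1) % ny, i]
                + field[j, (i - 1) % nx]
                + field[j, (i + 1) % nx]
            ) / 5.0
    return out


def smooth_vectorized(field: np.ndarray) -> np.ndarray:
    """Update every grid point in one whole-array operation."""
    # np.roll shifts the array with wraparound, giving each point's
    # neighbor values without an explicit loop.
    return (
        field
        + np.roll(field, 1, axis=0)
        + np.roll(field, -1, axis=0)
        + np.roll(field, 1, axis=1)
        + np.roll(field, -1, axis=1)
    ) / 5.0
```

Calling both functions on the same random grid and comparing them with `np.allclose` shows they agree. Only the whole-array form exposes all of the grid points as parallel work that an array library or compiler could hand to many processing units.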

The move to GPUs will not come easily. Since October 2017, NCAR has been working with The Weather Company to enable NCAR's next-generation global atmospheric model, MPAS (the Model for Prediction Across Scales), to run on GPUs.

The goal is to keep the original code intact, so it's still usable on CPUs, while also enabling it to run on GPUs. This involves painstakingly combing through hundreds of thousands of lines of code and adding instructions aimed at GPU-enabled machines. Sometimes it also requires rewriting some of the original code to make it compatible with both architectures. That is a sticky proposition that can have unintended consequences for the way the model represents the world or for the code's performance on CPU machines.
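The article does not say which mechanism MPAS uses to add its GPU-aimed instructions, and MPAS itself is not written in Python. The sketch below is therefore only an analogy for the "one code base, two architectures" goal. A toy diffusion step is written against an array module `xp` that the caller passes in. With the default NumPy module it runs on a CPU exactly as written. A NumPy-compatible GPU library such as CuPy could, in principle, run the identical function. The function name, the `xp` convention, and the `kappa` coefficient are illustrative assumptions, and the GPU path is not exercised here.

```python
from types import ModuleType

import numpy as np


def diffuse(field, steps: int, kappa: float = 0.1, xp: ModuleType = np):
    """Apply `steps` explicit diffusion steps on a periodic 2D grid.

    The body uses only operations that NumPy-compatible array libraries
    share (`roll` and arithmetic), so the caller selects the hardware by
    choosing `xp`; the CPU path (xp=numpy) is left unchanged.
    """
    for _ in range(steps):
        # Discrete Laplacian: sum of the four neighbors minus 4x the center.
        laplacian = (
            xp.roll(field, 1, axis=0)
            + xp.roll(field, -1, axis=0)
            + xp.roll(field, 1, axis=1)
            + xp.roll(field, -1, axis=1)
            - 4.0 * field
        )
        field = field + kappa * laplacian
    return field
```

On a CPU, `diffuse(np.arange(12.0).reshape(3, 4), steps=5)` smooths the grid while conserving its total. The hypothetical GPU call would look like `diffuse(cupy.asarray(grid), steps=5, xp=cupy)`. The design choice mirrors the article's constraint: the numerical logic is written once, and the architecture-specific part is kept out of the science code.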

"This is not a situation where computer scientists and software engineers can just go off and rewrite the code and hand it back to the atmospheric scientists," Loft said. "It requires the active participation of the people who are creating and using the models. This is one of the strengths of NCAR: We have both the scientists and the engineering capability."

NCAR's strong ties with the university community will help with the task. Loft has created partnerships with the University of Wyoming and the Korea Institute of Science and Technology Information (KISTI) to engage graduate students in GPU portability work. The students gain experience with GPU technology at the leading edge of their fields, and NCAR gets the additional capacity it needs to wade through the enormous volume of code.

If all goes according to plan, NCAR is slated to deliver the GPU-enabled code to The Weather Company later this year. Both NVIDIA Corporation, a manufacturer of GPUs, and its PGI compiler team are helping out on the MPAS project.

The NCAR team is also already applying what it's learned on MPAS to making other models more efficient, including one of the Sun that is used to simulate our neighboring star's complex magnetic fields and their violent eruptions. The code, named MURaM, is fantastically complicated, allowing scientists to bridge the massive variety of scales necessary to simulate the entire life cycle of a solar flare. The MURaM GPU project includes another university partnership, this time with a graduate student from the University of Delaware.

"A model this sophisticated — which is able to simulate both what is going on beneath the Sun's surface as well as in the solar corona — requires a vast amount of computing resources, which limits the amount of simulations we can run," said NCAR scientist Matthias Rempel, one of the model's creators. "By optimizing our model to run on GPUs, we are opening up the possibility of being able to do many more model runs and — most importantly — run them in real time, which is a prerequisite for modeling solar eruptions as they unfold on the Sun."