Can artificial intelligence make Earth system modeling more efficient?

NCAR explores the possible applications of machine learning

May 28, 2019 - by Laura Snider

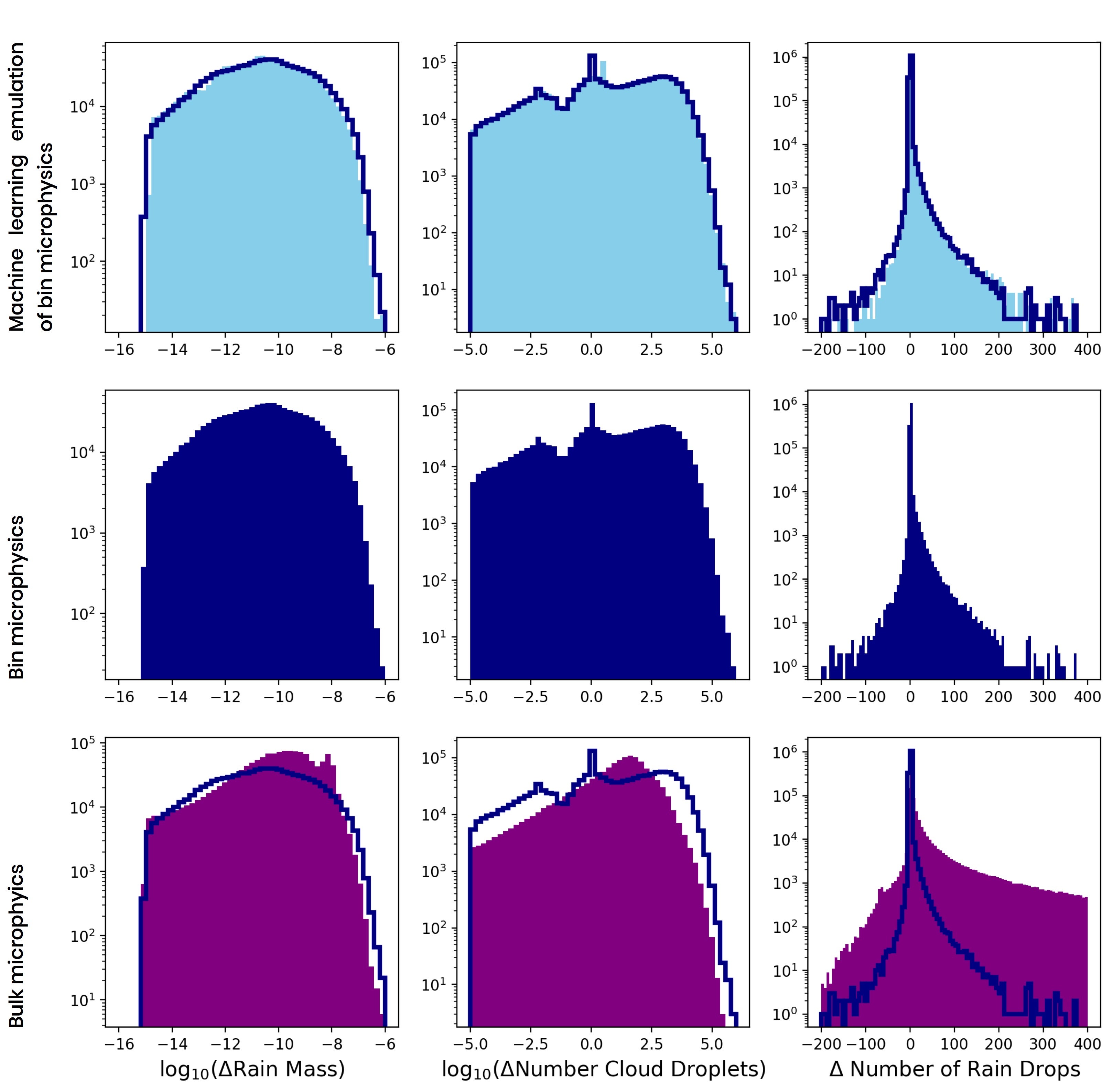

NCAR scientists used machine learning to emulate the results of the "bin microphysics" parameterization, a package of equations used to simulate the formation and evolution of clouds inside a climate model. While bin microphysics gives a more realistic representation of clouds than the simpler "bulk microphysics" scheme, scientists often cannot afford the computing resources needed to run bin microphysics for long periods of time. The use of machine learning may allow scientists to approximate the results of bin microphysics in a computationally efficient way. (©UCAR. Image: David John Gagne/NCAR)

To answer critical questions about the climate and how it's changing, scientists are pressing sophisticated Earth system models to solve increasingly complex equations. The result is more detailed simulations — and also more demand for the scarce supercomputing resources needed to run them.

Scientists at the National Center for Atmospheric Research (NCAR) are experimenting with using artificial intelligence to reduce the computational load. They hope machine learning can emulate some of the intricate physical processes that unfold in the atmosphere without having to solve all the complicated equations.

In one example, researchers are using machine-learning techniques to cut down on the huge amount of computational resources needed to realistically simulate the formation and evolution of clouds in the NCAR-based Community Earth System Model (CESM). Other efforts seek to improve aspects of the organization's weather and solar models.

"We are exploring whether artificial intelligence can be applied to these massive scientific problems that are hitting walls due to limits on available supercomputing resources," said Anke Kamrath, director of NCAR's Computational and Information Systems Laboratory. "If we're successful, machine learning could boost model performance with the computing resources we already have."

The evolution of tiny ice and water particles inside clouds unfolds on a microscopic scale, much too small to be resolved by global climate models.

And yet climate models must be able to represent the formation and development of clouds — which reflect the Sun's heat back into space, trap warmth at the Earth's surface, and redistribute water around the globe — to accurately simulate the world's climate.

To do that, scientists have created parameterizations, or simplified approximations of the actual microphysics governing cloud behavior. The most realistic of these parameterization schemes, called bin microphysics, generates comparatively detailed estimates but requires vast amounts of computing resources when run on a global scale. That has meant that scientists largely rely on a simpler parameterization scheme, called bulk microphysics, when simulating the global climate over decades or centuries.

For the new machine-learning effort, NCAR scientists Andrew Gettelman and Jack Chen ran CESM for a 2-year simulated period using the computationally taxing bin microphysics parameterization. Then the machine-learning team set about seeing whether a neural network could mimic some of the bin microphysics processes related to the transition of cloud droplets into raindrops.

They fed the neural network the same inputs used by, and outputs created by, the bin microphysics parameterization. The idea was to see if the machine could identify patterns amid the glut of data and learn that a particular combination of inputs (the mass of cloud and rain droplets, for example) tends to yield a particular type of output (in this case, the change in the number of raindrops). By identifying the patterns of relationships between inputs and outputs, the scientists hoped the machine could emulate the bin microphysics results without solving the actual physics equations.
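The idea of learning an input-to-output mapping from an expensive scheme's own data can be illustrated with a toy emulator. This is a minimal sketch, not NCAR's code: `reference_scheme` is a hypothetical stand-in for the bin microphysics parameterization (its formula is invented and is not real microphysics), the inputs are synthetic values standing in for cloud and rain water mass, and the network is a tiny one-hidden-layer model trained with plain gradient descent in NumPy.

```python
import numpy as np


def reference_scheme(qc, qr):
    """HYPOTHETICAL stand-in for an expensive parameterization: maps cloud and
    rain water mass to a change in raindrop number. Invented formula, not physics."""
    return qc * qr + 0.5 * qc ** 2


def make_dataset(n, rng):
    # Columns are (cloud mass, rain mass), scaled to [0, 1] for illustration only.
    x = rng.uniform(0.0, 1.0, size=(n, 2))
    y = reference_scheme(x[:, 0], x[:, 1]).reshape(-1, 1)
    return x, y


class TinyEmulator:
    """One-hidden-layer neural network trained by full-batch gradient descent."""

    def __init__(self, n_in, n_hidden, rng):
        self.w1 = rng.normal(0.0, 0.5, size=(n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.w2 = rng.normal(0.0, 0.5, size=(n_hidden, 1))
        self.b2 = np.zeros(1)

    def predict(self, x):
        return np.tanh(x @ self.w1 + self.b1) @ self.w2 + self.b2

    def train_step(self, x, y, lr):
        # Forward pass, keeping hidden activations for backpropagation.
        h = np.tanh(x @ self.w1 + self.b1)
        err = h @ self.w2 + self.b2 - y
        loss = float(np.mean(err ** 2))
        # Gradients of the mean squared error with respect to each parameter.
        d_pred = 2.0 * err / x.shape[0]
        g_w2 = h.T @ d_pred
        g_b2 = d_pred.sum(axis=0)
        d_h = (d_pred @ self.w2.T) * (1.0 - h ** 2)
        g_w1 = x.T @ d_h
        g_b1 = d_h.sum(axis=0)
        # In-place updates so the stored parameter arrays change.
        for p, g in ((self.w1, g_w1), (self.b1, g_b1), (self.w2, g_w2), (self.b2, g_b2)):
            p -= lr * g
        return loss


rng = np.random.default_rng(0)
x_train, y_train = make_dataset(2000, rng)   # "run the expensive scheme" once
x_test, y_test = make_dataset(500, rng)      # held-out cases
model = TinyEmulator(2, 16, rng)
losses = [model.train_step(x_train, y_train, lr=0.05) for _ in range(4000)]

test_mse = float(np.mean((model.predict(x_test) - y_test) ** 2))
baseline_mse = float(np.var(y_test))  # error of always predicting the mean
print(f"emulator MSE {test_mse:.5f} vs. mean-only baseline {baseline_mse:.5f}")
```

Once trained, calling `model.predict` costs a couple of small matrix multiplications, which is the kind of saving an emulator is meant to offer; a real emulator would need far more inputs, careful validation, and checks that it behaves physically inside the host model.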

The early results look promising.

"Our big takeaway is that the neural network is able to produce results much closer to the bin microphysics scheme than you would get running the model with the simpler bulk microphysics," said David John Gagne, an NCAR machine-learning scientist working on the project. "We think this will allow models to more realistically simulate clouds in global climate model projections with the supercomputing resources already available."

The next big challenge is to figure out how to fit the neural network into the larger CESM model, giving scientists the option to use it or not, depending on their needs. CESM consists of smaller models that simulate the atmosphere, land surface, sea ice, and oceans, and replacing any feature must be done with care.

NCAR's machine learning team is also having success with other projects. In one, they are using a neural network to improve the mechanism in the NCAR-based Weather Research and Forecasting model (WRF) that exchanges information between the land surface and the atmosphere. The model's current "surface layer parameterization" scheme, which is used to compute the temperature near the ground, among other variables, is less accurate in mountainous regions and at night.

The scientists, including Gagne, Tyler McCandless, and Branko Kosovic, are training neural networks to relate the model data to observations of surface temperature. The goal is to identify patterns that can then be used to predict surface temperature from the model data alone.
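The basic workflow (pair model output with observations, learn the relationship, then apply it to model data alone) can be sketched with a deliberately simpler learner. This sketch swaps in ordinary least-squares regression in place of the neural networks described above, and everything in it is hypothetical: the predictors (model temperature, elevation, a night flag) echo the weaknesses mentioned for mountains and nighttime, and the "observations" are synthetic numbers with an invented bias, not station data.

```python
import numpy as np


def synthetic_station_data(n, rng):
    """HYPOTHETICAL training pairs: model-predicted near-surface temperature plus
    elevation and a night flag, and a synthetic 'observed' temperature with an
    invented bias that grows with elevation and at night."""
    model_temp = rng.uniform(-5.0, 30.0, size=n)      # deg C
    elevation = rng.uniform(0.0, 3000.0, size=n)      # m
    night = rng.integers(0, 2, size=n).astype(float)  # 1 = night, 0 = day
    observed = (model_temp - 0.001 * elevation - 1.5 * night
                + rng.normal(0.0, 0.5, size=n))
    features = np.column_stack([model_temp, elevation, night])
    return features, observed


def fit_correction(features, observed):
    # Least-squares fit of observed ~ intercept + linear combination of features.
    design = np.column_stack([np.ones(len(features)), features])
    coef, *_ = np.linalg.lstsq(design, observed, rcond=None)
    return coef


def apply_correction(coef, features):
    # Predict surface temperature from model data alone.
    return coef[0] + features @ coef[1:]


rng = np.random.default_rng(1)
features, observed = synthetic_station_data(1000, rng)
coef = fit_correction(features, observed)

raw_rmse = float(np.sqrt(np.mean((features[:, 0] - observed) ** 2)))
corrected_rmse = float(np.sqrt(np.mean((apply_correction(coef, features) - observed) ** 2)))
print(f"raw model RMSE {raw_rmse:.2f} C, corrected RMSE {corrected_rmse:.2f} C")
```

The errors here are computed on the training pairs only; a real evaluation would hold out stations or time periods. A neural network would replace `fit_correction` with a nonlinear learner able to capture interactions a linear fit misses.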

Another project is using machine learning with the aim of speeding up solar models so that researchers can estimate the severity of a coronal mass ejection before it reaches Earth. That would give scientists enough time to warn about possible space weather impacts on communication systems, satellites, and astronauts.

Currently, solar models are so complex that they cannot be run quickly enough to provide adequate warning. But the trained neural network may be able to estimate the severity of coronal mass ejections in a much more computationally efficient way.

These projects may just be the beginning when it comes to leveraging machine learning to help improve traditional modeling. Aside from speeding up the models themselves, machine learning can also help direct scientists' efforts to improve the model code, highlighting where small, directed improvements may make the biggest difference in model results.

"We are just beginning to explore the many varied applications of machine learning, and how neural networks may be able to supplement traditional model development," said CISL's Kamrath.