The rise of MPAS

NSF NCAR’s next-generation atmospheric model garners significant community interest

Jun 12, 2024 - by Laura Snider

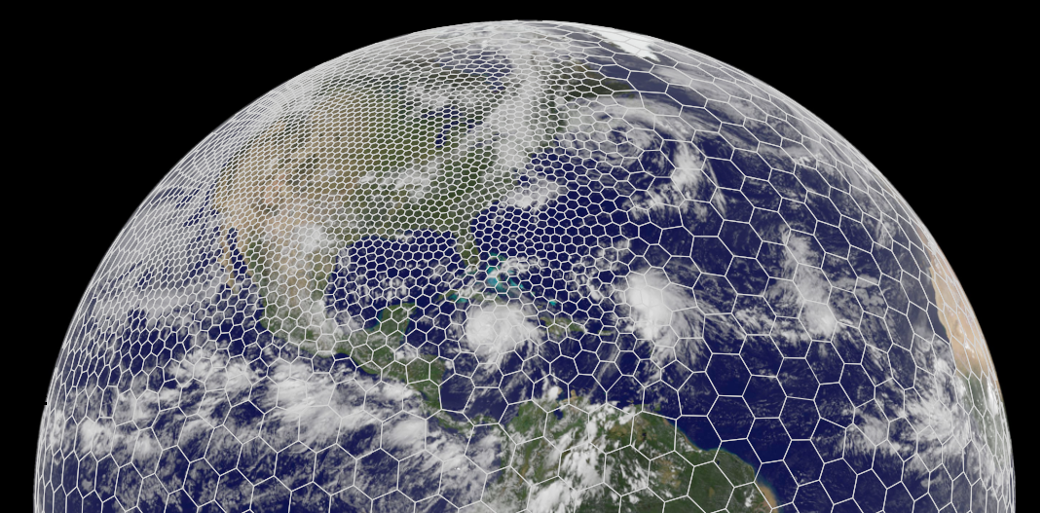

MPAS uses a honeycomb-like mesh that allows the model to simultaneously simulate the atmosphere over some parts of the globe in high resolution, capturing small-scale weather phenomena like thunderstorms, and over other parts in low resolution, capturing large-scale atmospheric flow.

A flexible, next-generation atmospheric model with the capability to accurately model weather, both regionally and globally, has been garnering increasing interest from user groups around the world, including weather forecasters, government agencies, climate scientists, private companies, and beyond.

The Model for Prediction Across Scales (MPAS) was developed by the U.S. National Science Foundation National Center for Atmospheric Research (NSF NCAR). It’s one of the tools the organization is investing in to help bridge a gap that exists between high-resolution regional weather modeling and low-resolution global climate modeling.

“MPAS development has been a priority for NSF NCAR because we believe in its potential to help connect the weather and climate research communities,” said NSF NCAR Director Everette Joseph. “For example, it’s imperative that we improve our ability to predict how climate change will affect severe weather at the community scale, and MPAS is one of the ways we are working toward doing that. I’m thrilled to see its use taking off in the community. As has always been the case, our models evolve and improve the more the community begins to use them and contribute back what they’ve learned. This is what community modeling is all about.”

MPAS’s promise lies, in part, in its unique grid system. The honeycomb-like mesh allows the model to simultaneously simulate the atmosphere over some parts of the globe in high resolution, capturing small-scale weather phenomena like thunderstorms, and over other parts in low resolution, capturing large-scale atmospheric flow. Practically speaking, this creates a tool that could be useful both as an atmospheric model for weather researchers, who typically run regional simulations at high resolution over days and weeks, and as the atmospheric component of an Earth system model for climate researchers, who typically run global simulations at low resolution over decades and even centuries.

Weather and climate research communities in the United States have historically relied on separate models, which has made it challenging to study in detail how climate change affects weather, and current climate and weather models are not well suited to the other community’s needs. For example, it’s challenging for scientists to run a climate model at extremely high resolution over a small area or to run a weather model globally on long timescales and get realistic-looking outcomes. MPAS can’t fix all the problems, but NSF NCAR researchers say it has the potential to help bridge the existing gap.

Forecasters and researchers from across the spectrum of weather and climate communities are now actively using MPAS — either as a stand-alone model or by using just the model’s dynamical core as part of a different modeling system. The dynamical core is the heart of a model, and it solves the equations that describe how air and heat flow through the atmosphere. NOAA’s National Severe Storms Laboratory is experimenting with using the MPAS dynamical core for extremely high-resolution regional weather forecasts, for example, while researchers at NSF NCAR and their university collaborators are working to connect it to models of other Earth system components — including the ocean, land, and sea ice — to facilitate its use in climate science.

Since its early years, NSF NCAR has been developing state-of-the-art weather and climate models with the scientific community that have become mainstays for university researchers, government agencies, and the private sector. To ensure the models continue to be cutting edge, researchers often begin working on the next version of the model — or a new model altogether — while the older version is still in its prime.

NSF NCAR’s original community weather model was developed in collaboration with Penn State and called the Mesoscale Model (MM). In the mid-90s, the fifth generation of the model (MM5), which could be used for the first time on workstation computers (instead of a supercomputer), was one of the most used mesoscale models in the world. NSF NCAR held user workshops for the model and released thorough documentation, which enabled widespread adoption. But even at the height of its success, scientists recognized that the model itself was not ideal for going to higher and higher resolutions, a clear direction for future research.

So in the mid-90s, NSF NCAR scientists partnered with NOAA, the U.S. Air Force, the Naval Research Laboratory, the University of Oklahoma, and the Federal Aviation Administration to begin working on what would come next. The result, released in 2000, was the Weather Research and Forecasting Model (WRF), which has since supplanted MM5 as arguably the most used weather model in the world. There are more than 61,000 users who have registered to download WRF since its initial release, and more than 10,000 scientific publications have cited WRF.

WRF’s success is a direct result of its co-development with the community. As an open-source model, WRF is available to anyone for free, and university researchers, students, and others in the weather community — in addition to NSF NCAR researchers — have gone on to use the model in an astounding number of ways. Versions of WRF are now used to model wildfire behavior, the amount of the Sun’s energy available for solar power production on any given day, and snowpack characteristics and the resulting avalanche risk, among many other applications. WRF has also been adapted for use by NOAA forecasters to predict hurricane risks, severe thunderstorms, and flooding and inundation across America’s waterways.

While WRF has no problem going to extremely fine resolutions if you have the computing resources to allow it, scientists realized early on that WRF may not be the best suited model for the next generation of frontier science: going to high resolution across the entire globe. The issue with WRF is its grid, which is structured along lines of latitude and longitude. For most of the populated globe, this results in box-like structures, and the model solves equations in each box that determine atmospheric flow. However, this system has problems around the poles where the lines of longitude converge, resulting in narrow boxes that turn into triangles.

The issue this creates is known as the “pole problem.” While historically many global climate models have used a latitude-longitude grid system for their atmospheric components, they rely on patches and work-arounds to address the pole problem, a solution that works adequately at low resolution but breaks down at storm scales.
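The geometry behind the pole problem can be illustrated with a few lines of Python. This is only a rough sketch, not code from WRF or MPAS: it assumes a spherical Earth with a mean radius of 6,371 km, and the function names `cell_width_km` and `cell_height_km` are hypothetical helpers introduced here for illustration. It computes the east-west and north-south extent of a one-degree latitude-longitude grid cell at several latitudes.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean radius of a spherical Earth


def cell_width_km(lat_deg, dlon_deg):
    """East-west arc length of a cell spanning dlon_deg of longitude at lat_deg."""
    return EARTH_RADIUS_KM * math.cos(math.radians(lat_deg)) * math.radians(dlon_deg)


def cell_height_km(dlat_deg):
    """North-south arc length of a cell spanning dlat_deg of latitude."""
    return EARTH_RADIUS_KM * math.radians(dlat_deg)


if __name__ == "__main__":
    # Compare a 1-degree by 1-degree cell at the equator, midlatitudes, and near the pole.
    for lat in (0, 45, 80, 89):
        width = cell_width_km(lat, 1.0)
        height = cell_height_km(1.0)
        print(f"lat {lat:2d}: width {width:7.2f} km, height {height:7.2f} km")
```

The north-south extent stays fixed while the east-west extent shrinks with the cosine of latitude, so cells that are roughly square at the equator become thin slivers near the poles.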

As NSF NCAR scientists began to search for a solution to the pole problem for global-scale weather modeling, they revisited a grid structure that had been tinkered with for atmospheric applications for decades, including as a possible mesh for NSF NCAR’s global climate modeling efforts. The grid is called an unstructured Voronoi mesh, which for the purposes of MPAS is a mostly hexagonal grid system that gives the model the ability to seamlessly zoom in or out across different parts of the globe.

While the idea of using a Voronoi mesh is not new, scientists previously struggled to develop the complex math necessary to get atmospheric models to work on the hexagonal cells. In particular, they were stumped by how to get the models to accurately simulate the Coriolis effect, which is important for atmospheric flow, including making hurricanes spin. The breakthrough that allowed MPAS development to proceed happened when NSF NCAR scientists Bill Skamarock and Joe Klemp joined forces with two other researchers, John Thuburn from the University of Exeter and Todd Ringler from Los Alamos National Laboratory (LANL), who happened to be working on Voronoi meshes at the same time.

Together, they found a mathematical solution and published a paper on it in 2009. Afterward, NSF NCAR and LANL both went on to begin developing versions of MPAS — NSF NCAR’s applied to the atmosphere while LANL’s is an ocean model. In June 2013, version 1.0 of MPAS-Atmosphere was released to the community.

In the intervening years, weather and climate scientists have both been working on MPAS development. On the weather side, the focus has been to get MPAS on par with WRF in its ability to accurately predict the weather. In 2019, NSF NCAR released a regional version of MPAS that allowed weather developers to test and refine MPAS at high resolutions over limited areas more efficiently, with the idea that innovations and improvements will feed back to the global model.

In the last few years, the regional model has made significant strides, thanks in part to weather forecasters. Daily use and testing by the people who are tasked with issuing forecasts to the public quickly exposes what is working well and, importantly, what is not. In particular, meteorologists at NOAA’s National Severe Storms Laboratory (NSSL) in Oklahoma have been testing the MPAS dynamical core to see how it would perform as part of their Warn-on-Forecast system, a project that aims to increase the lead time of warnings for tornadoes, severe thunderstorms, and flash floods.

Last spring, NSSL experimented with using the MPAS dynamical core in configurations similar to the Rapid Refresh Forecast System, a model designed to provide extremely high-resolution forecasts of hazardous weather. It performed so well that the agency is considering a switch to the MPAS dynamical core for version 2 of the development project.

“To push model development forward, you really need someone to put it through its paces every day in forecast mode,” said Skamarock, who leads MPAS development at NSF NCAR. “As research meteorologists, we don’t do that. So having NOAA scientists and forecasters experimenting with MPAS during the Spring Experiment at the Hazardous Weather Testbed was vitally important. When you use it every day, you encounter the failures, and then we can identify problems and work on solutions.”

Other U.S. government agencies beyond NOAA are also interested in using MPAS, including the U.S. Air Force and the Environmental Protection Agency. Additionally, MPAS is being considered for use by weather services in other countries, including Taiwan and Brazil. The use of MPAS by government agencies that provide “operational” forecasts, as well as by climate and weather researchers, also helps bridge a gap that has often existed between operational and research communities and smooths the way for research advances to make their way into official forecasts.

“Accelerating research to operations has been a priority in the U.S. for decades, as has been documented in numerous studies,” Joseph said. “MPAS addresses this national need and represents an example of actionable science at NSF NCAR.”

While MPAS has recently come into its own in its ability to represent storms in high-resolution modeling efforts, it does not yet replace all the capabilities of WRF. But WRF’s incredible breadth of applications and capabilities is a result of many years of collaborations in which the community used and adapted the model and then contributed back what they created. Skamarock is confident that the same will happen with MPAS over time.

“Regional MPAS is only getting started,” Skamarock said. “The community contributions are just starting to come in and it’s really exciting to see the ideas and interest from our users.”

As with the community models that preceded MPAS, NSF NCAR actively encourages and supports community use of MPAS by providing training, documentation, and opportunities for the modeling community to come together to share and discuss their work using MPAS. This support, including yearly tutorials and workshops, is vital for encouraging community co-development, which, in turn, is critical to pushing the model forward.

NSF NCAR also recently announced that it is no longer actively developing WRF, though it will continue to support the model for now. Instead, its development focus has shifted entirely to MPAS.

Simultaneously, work is ongoing to make the MPAS dynamical core available for the atmospheric component in global Earth system models to enable storm-scale climate simulations. Two projects — one led by NSF NCAR and one led by Colorado State University — are attacking the problem from different angles. However, both projects aim to use the NSF NCAR-based Community Earth System Model (CESM) as the framework that will connect the different modeling components together and they are collaborating to address challenging technical problems as they arise.

The first project, led by NSF NCAR, is called the System for Integrated Modeling of the Atmosphere (SIMA). This project is creating a framework that will allow scientists to customize an atmospheric modeling component within CESM. They plan to include the MPAS dynamical core as one choice among several for researchers to choose from.

The second project is led by Colorado State University and is known as EarthWorks. EarthWorks seeks to connect the MPAS dynamical core to two other sister models that use the same grid system — MPAS-Ocean and the recently released MPAS-Seaice models — using the CESM framework.

EarthWorks has encountered a number of challenges, many of which are related to software engineering and computing infrastructure, including running into memory limits as resolution increases. Even so, in collaboration with the CESM and SIMA teams, significant progress has been made. A joint project across all three modeling efforts is now preparing to run the model through a 40-day, high-resolution simulation known as DYAMOND, which allows the output to be compared apples-to-apples with that of other global storm-resolving models.

While EarthWorks is university-led, the lessons learned are being contributed back to NSF NCAR’s larger climate modeling efforts and the CESM development community. In fact, the EarthWorks team includes NSF NCAR scientists from three of its labs, representing weather, climate, and computing research.

“From the beginning, the EarthWorks project has brought people together from different pieces of NSF NCAR, but with the university in the lead,” said David Randall, the EarthWorks principal investigator, a distinguished professor at CSU. “The team members have all brought different perspectives and different histories, and we’ve all worked together to successfully move this project forward.”

While EarthWorks and SIMA are both working with a global version of the MPAS dynamical core, EarthWorks is using a version of MPAS enabled to run on graphics processing units (GPUs). Traditionally, atmospheric and climate models have been developed to run on central processing units (CPUs). While scientists have half a century of experience programming CPUs for weather and climate models, they are still learning how to best use GPUs for that purpose. GPUs are less flexible than CPUs and are more difficult to program, but GPUs are potentially much more cost effective in terms of both computational speed and power consumption. The vast amount of computing resources necessary to run global high-resolution models is a challenge for scientific progress, and using the latest computing capabilities is critical.

The GPU-enabled version of MPAS was first developed as part of a partnership with IBM and The Weather Company, which is using the model as the basis of its Global High-Resolution Atmospheric Forecasting System (GRAF). This was one of the earliest efforts at refactoring global model code developed for CPUs to work on GPUs, and many valuable lessons were learned that can be applied to other projects in the future.

In the meantime, development continues across all these projects, and NSF NCAR and its partners are making steady progress on MPAS.

“In the past year, it feels like a corner has been turned,” said Gretchen Mullendore, the director of NSF NCAR’s Mesoscale and Microscale Meteorology Laboratory, where MPAS development began. “Our community is showing up for tutorials and workshops and scientific meetings to talk about how MPAS is already being used and what the future possibilities are. I am really excited to see where we go from here and to harness the creativity and expertise of our user community to build the best model possible.”